Modulate Drum Samples

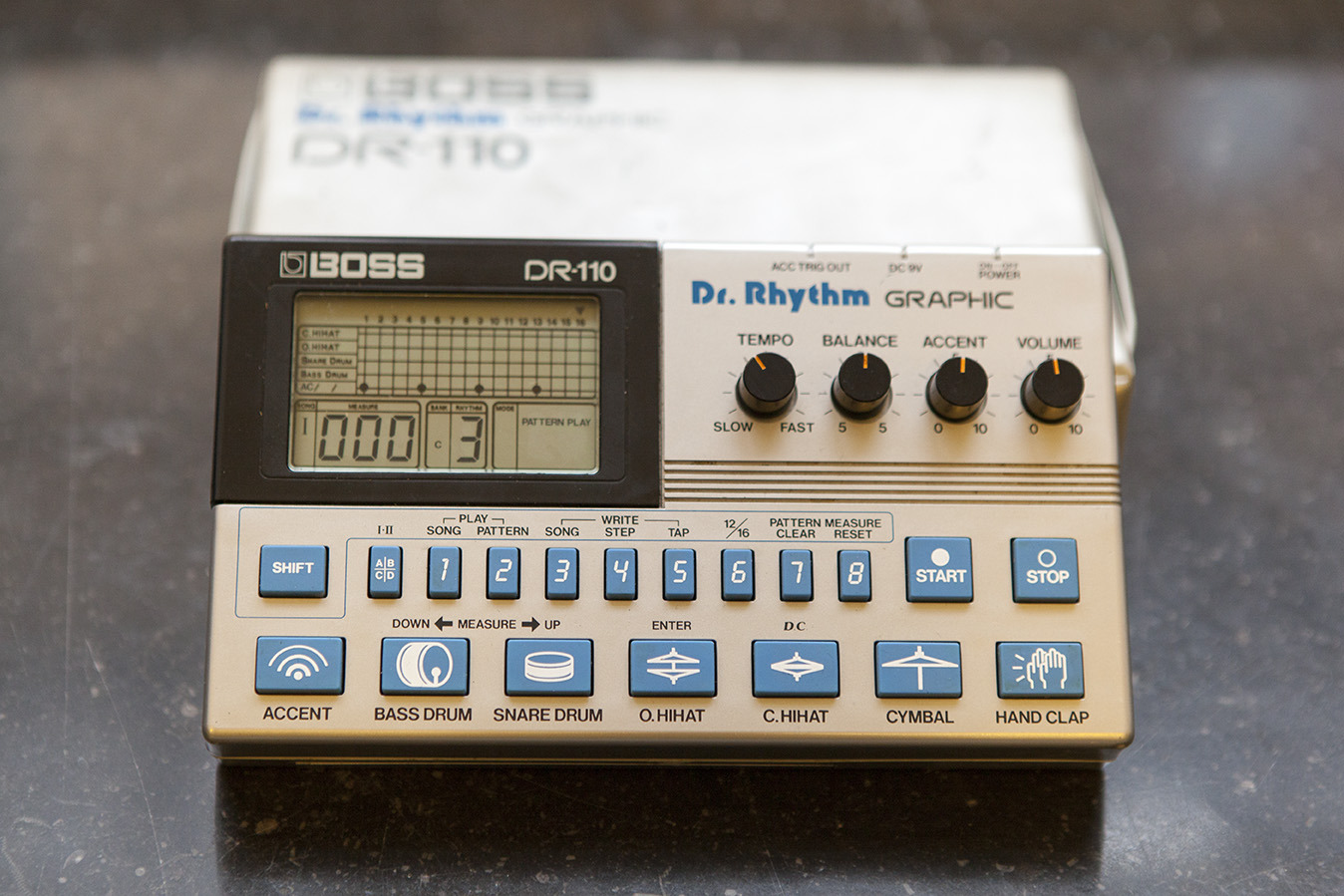

I have a few old drum machines, some analog and some digital. Sometimes I use them with their internal sequencers synced to the tracks in the DAW. I do this because working with hardware and its limitations can be fun and inspiring.

Still, most of the time it feels a little time-consuming and inconvenient. So I sample the machines and set up a Drum Rack in Ableton Live, which is much more practical, but fixed samples can make the result sound stiff and lifeless.

There are a few things you can do to remedy this. One is to use several samples of the same drum and switch between them in a round-robin style (read this tutorial on the Ableton website).
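To make the round-robin idea concrete, here is a minimal Python sketch of the logic, not of how Ableton implements it. The class name `RoundRobinPad` and the sample file names are hypothetical, made up for illustration only.

```python
from itertools import cycle


class RoundRobinPad:
    """Cycles through several recordings of the same drum hit (hypothetical helper)."""

    def __init__(self, samples):
        if not samples:
            raise ValueError("need at least one sample")
        # cycle() repeats the list forever, in order
        self._samples = cycle(samples)

    def trigger(self):
        # Each hit returns the next sample, wrapping around at the end
        return next(self._samples)


# Hypothetical file names: three recordings of the same hi-hat
pad = RoundRobinPad(["hat_a.wav", "hat_b.wav", "hat_c.wav"])
hits = [pad.trigger() for _ in range(5)]
```

Because no two consecutive hits use the same recording, the small differences between takes break up the "machine gun" effect of one fixed sample.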

Another technique I often use is setting a randomized velocity on the hi-hats for accents. In Ableton Live:

- Add a Drum Rack to a MIDI track.

- Put a hihat sample on a pad.

- Before the Simpler, add the Velocity MIDI effect.

- Set Random to less than 20 (values above 20 can affect the overall volume in a bad way, and a compressor added later in the chain may even out the small modulations you intended).
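As a rough illustration of what a small Random amount does to a hi-hat pattern, here is a Python sketch. It assumes the random offset is spread evenly above and below the incoming velocity and then clamped to the MIDI range. That is my assumption for the example, not a description of how the Velocity device works internally, and `randomize_velocity` is a hypothetical helper.

```python
import random


def randomize_velocity(velocity, amount=20, rng=random):
    """Offset a MIDI velocity by a random value in [-amount, +amount] (assumed model)."""
    offset = rng.randint(-amount, amount)
    # Keep the result inside the valid note-on velocity range 1..127
    return max(1, min(127, velocity + offset))


# A straight 16-step hi-hat pattern, every hit at velocity 100
rng = random.Random(0)  # seeded only so the example is repeatable
pattern = [100] * 16
played = [randomize_velocity(v, amount=20, rng=rng) for v in pattern]
```

With an amount of 20, every hit stays between 80 and 120, which is enough to sound like accents without large jumps in level.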

Also try adding a groove to your pattern.

- Go to Groove in your MIDI clip.

- Click on the hot-swap button.

- Choose whatever you like, e.g. MPC 16 Swing-58 is really nice.
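If you wonder what a 58% swing means for the timing, here is a small Python sketch. It assumes the common MPC-style definition, where the percentage says how far into each pair of 16th notes the second note lands (50% is straight). Actual groove files may also carry velocity and other timing information, which this sketch ignores, and `swing_16ths` is a hypothetical helper.

```python
def swing_16ths(num_steps, swing_percent=58.0):
    """Return 16th-note positions in beats with MPC-style swing applied (assumed model)."""
    pair_length = 0.5  # two 16th notes span one 8th note, i.e. half a beat
    positions = []
    for step in range(num_steps):
        pair_start = (step // 2) * pair_length
        if step % 2 == 0:
            # On-beat 16ths stay where they are
            positions.append(pair_start)
        else:
            # Off-beat 16ths are pushed later within their pair
            positions.append(pair_start + pair_length * swing_percent / 100.0)
    return positions


straight = swing_16ths(4, 50.0)
swung = swing_16ths(4, 58.0)
```

With 58% swing, the second 16th of each pair moves from 0.25 to about 0.29 beats after the pair starts, a small delay that gives the pattern its shuffle.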